Too long.

Your prompt on ChatGPT is too long. Here's the science behind it.

No one told you how to write a ChatGPT prompt.

So I will.

Because I’m obsessed with giving it the right instructions.

Just as the internet put anyone a few clicks away from becoming capable (fulfilled, rich, recognized…), I believe AI puts us a few prompts away from the same.

This is not about writing the longest prompt possible.

This is about writing a prompt of the right length.

At the end of this newsletter, you will master:

How long a prompt should be (really).

How to write good prompts on the fly, fast.

My (secret) prompt behind my 1-million-user GPT.

How long a prompt should be (really).

Your prompt on ChatGPT is too long.

Here’s the science behind it.

People think “longer” equals “clearer.” With ChatGPT, it often equals “noisier.”

“If models like ChatGPT can technically handle thousands of words, why not use them?”

Just because it can absorb a lot of text doesn’t mean it handles all of it well.

This piece draws the practical, research-backed line between “enough” and “too much.”

What the data really says (July 2025)

You don’t need a 2,000-word prompt. You need the right amount, placed in the right order. That’s not just my opinion; it’s what the research says (see sources below).

Zhang et al. (2025). An Empirical Study on Prompt Compression for Large Language Models. arXiv. arxiv.org/abs/2505.00019

Liu, Q., Wang, W., & Willard, J. (2025). Effects of Prompt Length on Domain-specific Tasks for Large Language Models. arXiv. arxiv.org/pdf/2502.14255

TryChroma Research (2025). Context Rot: How Increasing Input Tokens Impacts LLM Performance. research.trychroma.com/context-rot

Levy, M., Jacoby, A., & Goldberg, Y. (2024). Same Task, More Tokens: the Impact of Input Length on LLMs. arXiv. arxiv.org/html/2402.14848v1

Databricks Engineering (2025). Long Context RAG Performance of LLMs. Databricks blog. databricks.com/blog/long-context-rag-performance-llms

Balarabe, T. (2024). Understanding LLM Context Windows: Tokens, Attention, and Challenges. Medium. medium.com/@tahirbalarabe2

We can break down optimal prompt length into categories:

✦ Simple tasks

→ 50–100 words (summaries, brief explanations, standard queries).

✦ Moderate complexity

→ 150–300 words (analysis, outlines, creative briefs).

✦ Complex multi-part

→ 300–500 words (intricate specs, technical docs, comprehensive reports).
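To check a draft against these bands before you send it, here is a minimal Python sketch. It assumes a whitespace word count is a good enough proxy (models actually process tokens, not words), and the function name `length_band` and its labels are hypothetical; the band edges simply mirror the ranges above plus the 500-word threshold.

```python
def length_band(prompt: str) -> str:
    """Map a prompt's word count onto the length bands above."""
    # Whitespace word count: a rough proxy, since models actually see tokens.
    words = len(prompt.split())
    if words < 50:
        return "below simple range"
    if words <= 100:
        return "simple"
    if words < 150:
        return "between simple and moderate"
    if words <= 300:
        return "moderate"
    if words <= 500:
        return "complex"
    return "over 500: diminishing returns"


if __name__ == "__main__":
    draft = "Summarize the following document in five bullet points. " * 20
    print(len(draft.split()), length_band(draft))  # prints: 160 moderate
```

The bands are guidelines, not hard limits: a 120-word prompt is not "wrong", it just sits between two of the ranges listed above.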

What happens if you add more words to your prompt?

Returns start to diminish.

“Prompts exceeding 500 words generally show diminishing returns in terms of output quality.”

“For every 100 words added beyond the 500-word threshold, the model’s comprehension can drop by 12%.”

Interpretation: past ~500 words, you’re most likely confusing ChatGPT.

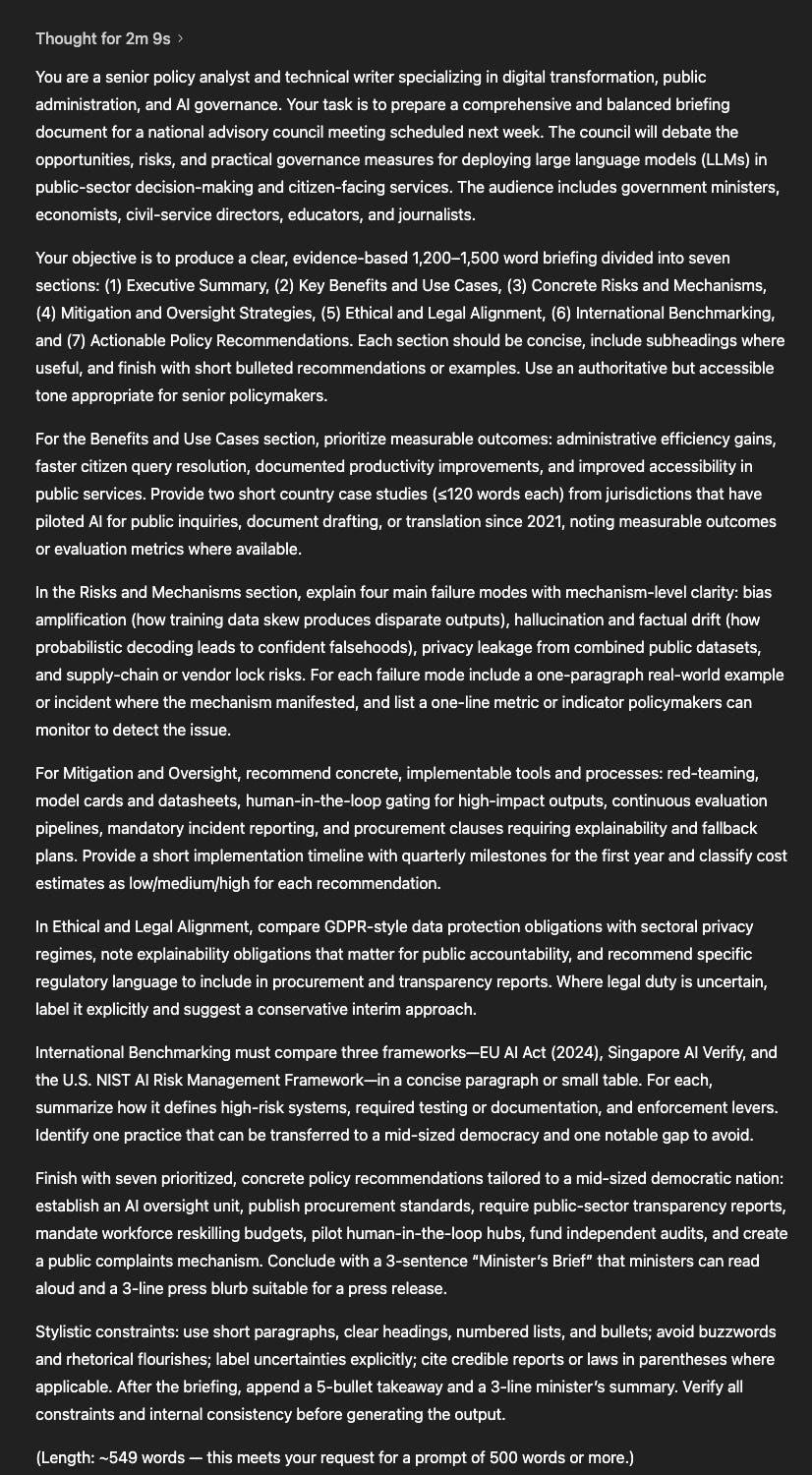

To make this concrete, here’s what a 500-word prompt looks like:

This is too much. Don’t do this, ever.

By the way, I’m guilty of writing prompts this long myself.

It’s your job to ask for the right thing.

The mistake is to think ChatGPT reads like a human. Humans understand instructions selectively: we keep what matters and drop the rest. That’s both good and bad. It’s bad when I want you to follow my instructions strictly. It’s good when I want you to stay creative, even after a long list of instructions.

Bottom line: humans are awesome.

But LLMs like ChatGPT can be “bloated” very quickly.

The real enemy isn’t length. It’s bloat.

Dumping entire docs rarely helps. It dilutes instructions and invites error.

“The single greatest threat to output quality is prompt bloat.”

Why bloat breaks outputs:

✦ Distraction

“LLMs can be easily distracted by irrelevant context… less accurate and less relevant responses.”

✦ Lost-in-the-Middle*

*This is the most important one: whatever sits in the middle of your prompt tends to get lost.

“LLMs tend to give less weight to crucial information located in the middle…”

✦ Identification without exclusion

“LLMs… can identify irrelevant details, [but] frequently struggle to ignore this information…”

✦ Noisy rationales

“If irrelevant information seeps into… rationales, it can lead to inaccurate reasoning.”

You must go from “always more” to the right structure.

The layered prompt template (order > length)

Put the right things in the right places to avoid the “middle loss.”

Context/persona — beginning

“You are a professional technical writer…”

“Your job is to help an editor finish a new article.”

Primary task — beginning

“Summarize the following document:”

Passive data — middle (the vulnerable zone)

Fence it clearly so instructions don’t blend into content.

I like to put /// on both sides of the content.

Text: /// {paste source text here} ///

Constraints/format — end

Place non-negotiables at the end, like “This is a one-pager.”
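If you assemble prompts in code (for example, before sending them through an API), the same ordering can be enforced by a small helper. This is a sketch under stated assumptions: `build_layered_prompt` and its parameters are hypothetical names, and the /// fence is only the delimiter convention used here, not something ChatGPT requires. It rejects data that already contains ///, since that would close the fence early.

```python
def build_layered_prompt(persona: str, task: str, data: str, constraints: list[str]) -> str:
    """Assemble a prompt in layered order: persona, task, fenced data, constraints."""
    if "///" in data:
        # A stray /// inside the data would end the fence early.
        raise ValueError("data must not contain the /// delimiter")
    constraint_lines = "\n".join(f"- {c}" for c in constraints)
    sections = [
        persona,                              # beginning: context/persona
        task,                                 # beginning: primary task
        f"Text: /// {data.strip()} ///",      # middle: passive data, clearly fenced
        f"Constraints:\n{constraint_lines}",  # end: non-negotiables (recency)
    ]
    return "\n\n".join(sections)


if __name__ == "__main__":
    print(build_layered_prompt(
        persona="You are a professional technical writer. Your job is to help an editor finish a new article.",
        task="Summarize the following document:",
        data="{paste source text here}",
        constraints=["This is a one-pager."],
    ))
```

Keeping the order in one function means the constraints always land last, where the layered template wants them, no matter how long the pasted data gets.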

Once structure is set, choose your strategy.

Strategy: Zero-Shot first. Few-Shot when necessary.

A Zero-Shot prompt asks ChatGPT for something without showing an example of what you want. It’s how almost everyone prompts ChatGPT.

“Summarize this” — “Write this” — “Who’s the president of…”

Just don’t be (too) polite when prompting. It can make ChatGPT’s answers worse.

A great way to strengthen Zero-Shot prompts is explicit role-play.

Setting a clear persona (e.g., “You are an HR consultant preparing a briefing”) immediately constrains and guides the model’s behavior and knowledge domain.

Think of ChatGPT like a massive library.

If you say “You are a gardener”, it will go to the gardener aisle.

If you say “You are a software engineer”, it will go to the engineering aisle instead.

Research indicates that well-crafted Zero-Shot prompting, particularly when combined with persona setting, strikes the right balance for everyday tasks.

So when should you move to more complex prompts (Few-Shot, Chain-of-Thought)? Let’s find out.

The CoT performance gain: Chain-of-Thought prompting, which instructs the model to articulate its thought process before giving the final answer (“Think step-by-step, then provide the answer”), can significantly improve performance on reasoning tasks.

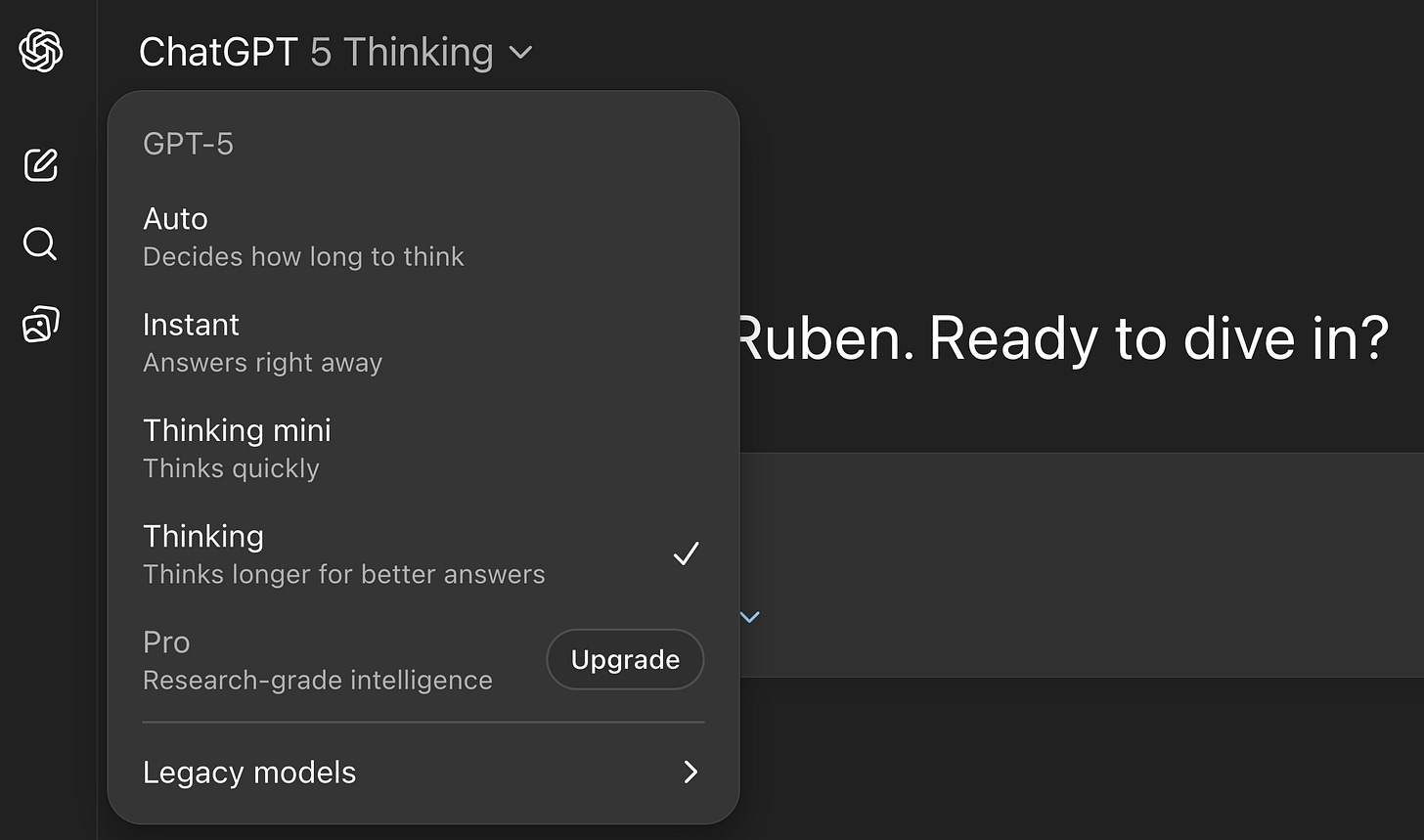

But today’s models have a “Thinking” mode that roughly mimics CoT reasoning.

My go-to method: give 3 simple steps to follow and turn on the “Thinking” mode.

Yes, ChatGPT takes longer to answer.

But it makes far fewer errors, which makes my work faster overall.

Sure, if I only need quick info, like a Google search, I use the Instant mode.

Length requirement in Few-Shot: Few-Shot prompting requires providing several high-quality input-output examples within the prompt itself.

Instead of asking “Create a memo for my team about X”, you prompt “Create a memo for my team about X, following this example: /// example ///”.

Since each example must include both the problem and the correct solution, this quickly inflates the total word count, sometimes into the thousands.
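A minimal sketch of how a Few-Shot prompt is assembled makes the growth visible: every example carries a full input and a full output, so the word count climbs with each one. `build_few_shot_prompt` is a hypothetical helper, the sample memo text is made up for illustration, and the /// fencing reuses the delimiter convention from the layered template.

```python
def build_few_shot_prompt(task: str, examples: list[tuple[str, str]], new_input: str) -> str:
    """Build a Few-Shot prompt: task, then input/output examples, then the new input."""
    blocks = [task]
    for i, (example_in, example_out) in enumerate(examples, start=1):
        # Each example needs both the problem and the correct solution.
        blocks.append(
            f"Example {i} input: /// {example_in} ///\n"
            f"Example {i} output: /// {example_out} ///"
        )
    blocks.append(f"Now do the same for: /// {new_input} ///")
    return "\n\n".join(blocks)


if __name__ == "__main__":
    task = "Create a memo for my team about X."
    example = ("Notes: launch moved to Friday.", "Team, the launch is now on Friday. " * 10)
    zero_shot = build_few_shot_prompt(task, [], "Notes: budget review on Monday.")
    three_shot = build_few_shot_prompt(task, [example] * 3, "Notes: budget review on Monday.")
    # Whitespace word counts, a rough proxy for tokens.
    print("Zero examples:", len(zero_shot.split()), "words")
    print("Three examples:", len(three_shot.split()), "words")
```

Comparing the two counts shows what each extra example costs before you paste in any of your real material.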

My recommendation:

Start with high-quality Zero-Shot CoT (role-play + “think step-by-step”).

Use Few-Shot only when a specialized task requires explicit examples.

Bottom line

“For moderately complex tasks, the optimal prompt length typically falls between 150 and 300 words… without crossing the 500-word threshold where diminishing returns and prompt bloat begin to degrade performance.”

If you need more than that, make it structural. Not longer.
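The bottom-line rule and the layered structure can be turned into a quick pre-flight check. This is a heuristic sketch, not a validator: `preflight` is a hypothetical name, the persona check only looks for “You are” or “Act like”, and word counts are whitespace-based approximations.

```python
import re


def preflight(prompt: str) -> list[str]:
    """Return warnings for a draft prompt; an empty list means no issue was found."""
    warnings = []
    words = len(prompt.split())  # whitespace words, a rough proxy for tokens
    if words > 500:
        warnings.append(f"{words} words: past the ~500-word threshold")
    # Heuristic persona check: looks for "You are" or "Act like" anywhere.
    if not re.search(r"\b(you are|act like)\b", prompt, re.IGNORECASE):
        warnings.append("no persona line ('You are ...' or 'Act like ...')")
    # Every opening /// should have a closing ///.
    if prompt.count("///") % 2 != 0:
        warnings.append("unbalanced /// fence around the context block")
    return warnings


if __name__ == "__main__":
    print(preflight("Summarize this."))
```

A clean result only means none of these three checks fired; it says nothing about whether the task itself is clear.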

Remember that ChatGPT is called CHAT—GPT for a reason.

You must chat with it.

Cheat sheet: 10-minute prompt checklist

☑ One-sentence task at the top (imperative verb)

☑ Persona set (“You are a …”) to anchor tone/knowledge

☑ 3 bullet requirements (must-haves, not nice-to-haves)

☑ Delimited context block: Text: /// … ///

☑ Constraints at the end (format, word count, style rules)

☑ Optional: “Think step-by-step” for reasoning-heavy tasks

☑ Restate the scope at the very end (recency)

☑ Ask for self-check: “Verify all constraints before answering”

☑ Include an evaluation line: “If info is missing, ask before proceeding”

☑ Keep total length 150–300 words for moderate tasks

☑ Avoid >500 words

Copy-ready template (paste into ChatGPT)

Act like a [role/persona].

Your goal is to [outcome] for a [audience].

Task: [one sentence, imperative verb]

Requirements:

1) [requirement 1]

2) [requirement 2]

3) [requirement 3]

Context:

///

[paste only relevant excerpts here]

///

Constraints:

- Format: [bullets/markdown/table]

- Style: [plain, analytical, concise]

- Scope: [include X, exclude Y]

- Reasoning: [Think step-by-step, then answer]

- Self-check: Verify all constraints before final answer.

A) Sweet-spot Zero-Shot prompt (≈200 words) — rewrite a section of your draft

Act like a senior editor for the “How to AI” newsletter. Your goal is to improve clarity, flow, and authority for the section below of my Substack essay: “Your prompt on ChatGPT is too long. Here’s the science behind it.”

Task: Rewrite the section to be crisp, structured, and persuasive while preserving any text I put in triple backticks as verbatim quotes.

Requirements:

1) Keep all triple-backtick content exactly as written.

2) Organize with H2/H3 headings, short paragraphs, and bullet points where useful.

3) Surface the strongest claims as pull quotes and callouts; do not invent statistics.

4) Convert any vague statements into concrete, testable instructions for readers.

5) If a claim seems to need a citation, add “[citation needed]” inline without fabricating sources.

6) End the section with a 3-sentence takeaway and a 5-item checklist.

Context:

///

[paste only the relevant section of my draft here]

///

Constraints (place at end for recency):

- Format: Markdown suitable for Substack

- Length of output: 600–900 words

- Style: direct, plain English, Ruben’s voice; no hype, no emojis

- Scope: preserve meaning and all verbatim quotes; remove redundancy

- Reasoning: Think step-by-step before writing; verify all constraints before final answer

B) Sweet-spot Zero-Shot-CoT (≈210 words) — turn a long source into a crisp reader explainer

Act like a plain-English science explainer for “How to AI.” Your goal is to draft an accessible 2-paragraph summary my readers can grasp in 60 seconds.

Task: From the text below, extract only the ideas needed to explain (1) why overly long prompts degrade results and (2) how to structure prompts for better outcomes.

Requirements:

1) Paragraph 1: the “why” (lost-in-the-middle, distractibility, bloat) in ≤120 words.

2) Paragraph 2: the “how” (layered template + 150–300-word sweet spot) in ≤120 words.

3) Include one boxed mini-checklist with 5 items (≤60 words).

4) Preserve any triple-backtick quotes exactly; do not invent data.

5) If something is uncertain, mark “[uncertain]” rather than guessing.

Context:

///

[paste excerpt(s) or notes here — only what’s relevant]

///

Constraints (end for recency):

- Format: Markdown; include one pull quote if present

- Tone: neutral, clear, useful; no fluff

- Output length: ≤320 words total

- Reasoning: Think step-by-step, then answer

- Self-check: Confirm both goals (why + how) are covered

Before vs after

Too short (fails to constrain):

Summarize why long prompts are bad and how to fix them.

Sweet-spot fix (≈190 words):

Act like my Substack section editor. Improve the passage below about prompt length and structure for everyday ChatGPT users.

Task: Produce a clear, actionable mini-guide that (1) explains why overly long prompts can hurt results and (2) shows readers how to structure prompts for better outcomes.

Requirements:

1) Start with a 1-sentence thesis.

2) Then a bullet list of the 3 main failure modes (distractibility, lost-in-the-middle, bloat) with one line each.

3) Provide a 6-step “layered prompt” recipe.

4) State the practical length rule: 150–300 words for moderate tasks; avoid >500 words.

5) End with a 5-item checklist and one pull quote from any triple-backtick text.

6) Do not invent statistics; mark “[citation needed]” if unsure.

Context:

///

[paste only the essential notes/quotes here; keep triple-backticks around must-keep lines]

///

Constraints:

- Format: Markdown; H3 headings; short lines

- Output length: 350–500 words

- Style: direct, no fluff, Ruben’s voice

- Reasoning: Think step-by-step; verify every requirement before final answer

You want to learn how to write quick, optimized prompts on the fly.

So let’s dive into section 2.

New here? Check out my archive of guides:

https://docs.google.com/document/d/1pWuMCBVQo1zKcgKltX_BZxAr31KgxmOlp3Vzvmc5Hxc/edit?usp=sharing.

How to write good prompts on the fly, fast.

Here is how I make quick prompts.

My GPT “Prompt Maker” from the video has over a million users.

And for the first time, I am giving away its system prompt.

My (secret) prompt behind my 1M+ user GPT.

You now know how long a prompt should be and how to write optimized prompts quickly.

Because that’s the key: the right instructions to AI, backed by science.

But you want to go deeper now.

So I’m sharing the system prompt behind “Prompt Maker”, my GPT with over 1 million users.

only accessible to paid members